The new draft Personal Data Protection Bill – Draft 2022 is introducing mandatory user verification as a part of platform and other fiduciary responsibilities. So to access anything, even FB & Instagram, you may have to provide your Aadhar card or other identity proof. Medianama organised a round table on exploring the user verification mandate, which was chaired by researchers, practitioners, technologists and lawyers from across the landscape. Below are my notes from the conference.

(PS: As notes are, this is preserved in all the spelling mistakes and typo glory, please excuse.

The notes are meant to echo what was said, as best understood by me as a legal lay person. These are not representative of my beliefs or opinions, although I do agree with most.)

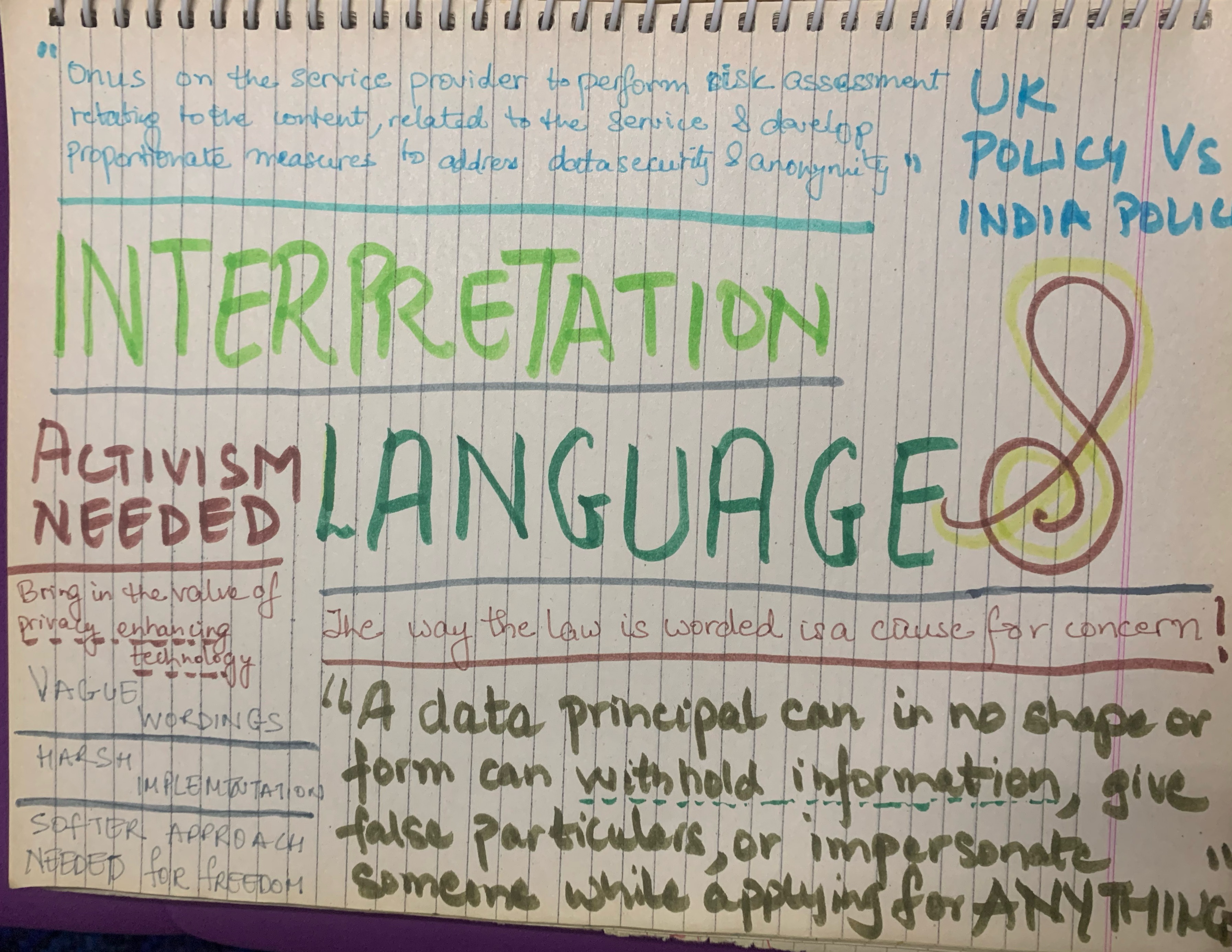

Artefact 1: The wording of the bill is vague, and its implementation in real-life circumstances can be termed harsh. The UK data policy, which also puts protection responsibility on the platforms’ or service providers’ shoulders, asks them to ‘perform risk assessment relating to the content, the service and develop proportionate measures to address data security & anonymity’. The India PDP, by contrast, does not define a structure but provides ‘ritualistic incantations’ that give the government and government agencies suo motu access to any data, even withdrawing the data principal’s (the end user’s) right to a copy of that data and right to its erasure.

It also lands the responsibility squarely on the shoulders of platforms by ensuring verification, protection & deletion of data thereby eroding the premise of safe harbour.

Artefact 2: An example can be seen in the new Cloud Service provider registration and onboarding. It asks disproportionate questions that mimic underwriting for financial services just to verify the identity of the cloud space buyers. The feasibility of asking random hard questions, the scalability of the same questionnaire to all cloud entrants, and the ambiguity of the questions to be asked leaves a person wondering if we are indeed addressing the problem to be resolved, which is to address the buying of cloud space for unlawful purposes.

CNAP, the real name identification of all mobile callers, is also a case in point. The proportionality gap and the absence of correlation with harm are clearly visible. Did we agree to displaying our name everywhere when we signed up with a telco? How will fraud be prevented if IDs are being faked so commonly today?

History of Identity Verification

No data is inherently private or public, context makes it so.

It’s not only about the system, but the ecosystem around it.

Artefact 3: The personal nature of data is deeply linked to the context around it. In the pre-digital era, phone numbers & addresses were published in public directories available everywhere. In fact, in 2004 TRAI was about to release a mobile number directory publicly but was opposed by DoT on the grounds of privacy. It would be a billion numbers today!

Today the phone number is secret because it is used in banking and other financial transactions. The ramifications of adding more data availability around it like names or addresses is likely to erode not only privacy but security of the individual. Even adding the name lowers the entry barrier for fraudsters.

Mobile numbers are voluntary, but mandatory.

If your receipt is being delivered on your phone, not providing the phone number means giving away your right as a consumer.

Artefact 4: After the 2017 Puttaswamy judgement we officially entered a new era of privacy jurisprudence. The norms that existed earlier have changed. What you have acquiesced to in the past can no longer be established without context today. The foreseeability of harm is now included in the decision making. The question to ask for any decision is ‘What is the differential in privacy that it has achieved?’.

Exploring the mandate

Artefact 5: The bill also mandates the removal of data once it has been verified. From the point of view of recourse, deleting it does not make sense in the case of a mishap, yet there is no mention of how recourse will be taken or given if something like the ‘blue whale challenge’ comes up. There is no mention of aggregation, or anonymisation of insights from the data. Although the process of de-anonymisation is fraught with risk, we can foresee many circumstances in which it may be prudent to be able to use the data for cyber forensics. What will happen when people demand recourse for a cyber crime?

Artefact 6: The verification of children has been made mandatory and ‘verifiable’ permission from a guardian will be needed for all kids below a certain age. Relationship verification, on the other hand, has not been mentioned, even though the bill mandates that everyone validate their identity before signing up for services or platforms.

Unlawful & inappropriate content for kids has not been defined as a type of harm by the bill, which mentions harm without defining it fully, only alluding to it as ‘harassment’. In addition, the joint parliamentary committee had recommended that the central government be able to add to the list of harms, which is not mentioned by the bill at all. In fact ‘harm’ or ‘significant harm’ is left to interpretation and can be misconstrued.

Risks should be weighed before verifying the identity of kids, as the personal data of children requires higher security. Higher standards of privacy like Differential Privacy & the ‘poisoning’ of data, homomorphic encryption and aggregation were available as tools to additionally secure data but are not specified by the bill. There are many ways to use technology to verify the age of children using the principles of ‘Zero Knowledge Proof’, where identity is withheld but age is verified.
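To make one of the tools named above a little more concrete, here is a minimal sketch of differential privacy applied to a simple counting question ("how many users are under 18?"). It was not presented at the round table. The function names, the list of ages and the value of `epsilon` are all assumptions chosen for illustration, and the Laplace mechanism shown is just one common way of doing this.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(ages, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the ages that match predicate.

    Hypothetical helper: smaller epsilon means more noise and more privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for age in ages if predicate(age))
    # Adding or removing one person changes a count by at most 1
    # (sensitivity 1), so the Laplace scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical ages held by a platform; illustrative values only.
    ages = [9, 12, 15, 17, 21, 34, 41]
    rng = random.Random(0)
    noisy = private_count(ages, lambda a: a < 18, epsilon=0.5, rng=rng)
    print(f"noisy number of under-18 users: {noisy:.2f}")
```

The point of the sketch is that a platform could publish or share the noisy aggregate without the answer revealing whether any one child is in the data. It does not cover zero knowledge proofs or homomorphic encryption, which need considerably more machinery.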

Artefact 7: Both Ex-Ante and Ex-Post ramifications of data theft should be considered before the government passes the mandate for collection of data for compliance. It is assumed that identity will act as a deterrent, and the bill mandates disclosure without creating the environment of security and trust. This increases data sensitivity in people who attempt to understand it, without addressing the reign of fear.

Artefact 8: The bill mandates verification & authentication for everyone but does not provide for portability of data legally. Each separate verification, and each sharing of personal data with a different service provider, increases the surface area of risk for a data breach.

The Mechanics of Fraud

Artefact 9-10: Even one ID can give fraudsters up to 5 months of runway & small frauds add up. In fact 2-3 IDs per year are enough to keep a fraudster in business.

With each member earning up to 2 lakh rupees a month, they have access to billions of dollars of investment and a stack that combines human social engineering with the manipulation of tech to ensure that the victim doesn’t even realise they have been scammed.

Studies done by Dwara research have shown that people who even suspect fraud still engage with it because of social pressures and the engineering of those pressures around them. Most recently Alok Bajpai, a start-up founder and an influencer, has been compromised, and the scammer is asking friends and family for money as if he were the one asking, using his persona to create social pressure on those being scammed. Because the amounts are small, there is not much of a barrier for people to comply.

Artefact 11: IDs, SIM cards and investment are easy to get for the new start-ups of fraud, and profit is calculated on the basis of income and expenditure. IDs are available for 1 paisa per ID, or they can be forged at low cost, thus providing the best ROI in the start-up ecosystem.

A two-person gang caught last year had compromised 305 bank accounts, 10 payment bank accounts, 12 PhonePe wallets and 10 Ola Money wallets. They had engineered the scam so that 91 bills of Punjab State Power Corporation were paid by the gang. No one who had been compromised knew about it.

NEGATIVE REINFORCING FEEDBACK LOOP – WILL TIP THE EQUILIBRIUM AGAIN TOWARDS THE FRAUDSTERS. NEEDS TO BE ADDRESSED AT A SYSTEMIC LEVEL

Artefact 12: The ask for more verification is like an ARMS RACE where the people with access to technology and funds are moving faster than regulation can percolate. The political economy of standards ensures that companies have to spend time and money to comply, and by the time they comply, the fraudsters are already ahead and have cracked the code. The common man suffers in this equation because the overloaded authorities don’t have enough time to deal with small time fraud as opposed to big breaches of crores.

Verification & Anonymity

Artefact 13: Anonymity has been the hallmark of the internet, where whole generations have interacted fearlessly to engage with science and tech. It is the current default, with no user verification and the ability to be anyone by just changing your name and picture. Platforms allow this, which lets people hide their identity while engaging in free speech and interaction with fellow internet users.

Pseudonymity has supported the rise of online communities for minorities and sub minorities who can now use the internet to not only connect to other similar people but also make their opinions and voices heard without the fear of backlash from society. It has supported the use of the internet to leverage it for good of the world. Pseudonymity also has a precedent where it has often been mediated by publishers for authors to publish and reach people under another name with the real name being known only to publishers.

With the new rules for mandatory verification, considerations need to be given to phishing, identity theft and revenge fraud. Is fraud becoming easier with the surface area of breach increasing?

What will happen to service providers we have already verified ourselves with, like our banks, educational institutions and especially telcos? Will they be able to use our verified identities because of this bill? Should the default be opt out, till every user can wilfully and knowingly verify themselves for the purpose of sharing, or opt out of the service altogether?

Artefact 14: Anonymity is an accepted concept, defined by David K as ‘The condition of avoiding identification’, which can be extended in the digital realm as ‘To avoid being identified by using pseudonyms’. Under the new mandate, anonymity can also be defined as ‘The degree of difficulty in de-anonymisation’. Infringement and the attempt to de-anonymise must be lawful.

Anonymity is both intrinsically a part of privacy as a concept and necessary for free speech on the internet for everyone. In the case of ‘Me too’ & ‘Glassdoor’ it helps protect the identities of those who are exposing wrongdoing or giving anonymous reviews. Curbing anonymity will severely hurt the ability of marginalised communities to navigate the internet and to use it for their own good.

Artefact 15: The mandate for user verification raises some questions. Is absolute anonymity not legal anymore? Should everyone be identified with service providers and be anonymous to the world if they want? How is this concept differentially applied to places where the category of data is more sensitive than others?

Artefact 16: Since anonymity is so closely linked to privacy, when doing a differential privacy analysis it is important to understand who the anonymity is against. Is it against the public? the service provider? auditors? regulatory authorities? or the government? This is also important when calculating the harm to privacy through mandatory disproportionate user verification.

Artefact 17: Anonymity is a rights issue. The right to privacy automatically implies anonymity by default unless we are identified because of ‘reasonable restrictions’. The said restrictions should be cognisant of current laws. In fact one of the considerations in imposing such restrictions should be a consideration of ‘stakes by law’. Whether it breaks an existing law elsewhere in the constitution is a big question to ask. The internet is an emergent system, one that should shape itself for the current legal landscape to fit into societal norms already established.

Equally important is to understand that by being anonymous are you giving away the right to recourse and redressal if a cyber crime was to happen to you?

The Taxonomy of Trade Off

Artefact 18: We are used to trading off our privacy for security – case can be taken of airport security where your privacy is breached and your person & personal belongings can be seen and analysed by someone who is deciding whether it is safe for you to travel with a hundred other people or not. It gives you a perception of security because you can rest assured that others are subject to the same checks. The airplane is safe.

Cut to today, your personal data is your responsibility. To trade it off, there should be proportionality in the transaction, you should be getting something of the same or similar value in return. It cannot be that you have to trade your DNA to get a bank account.

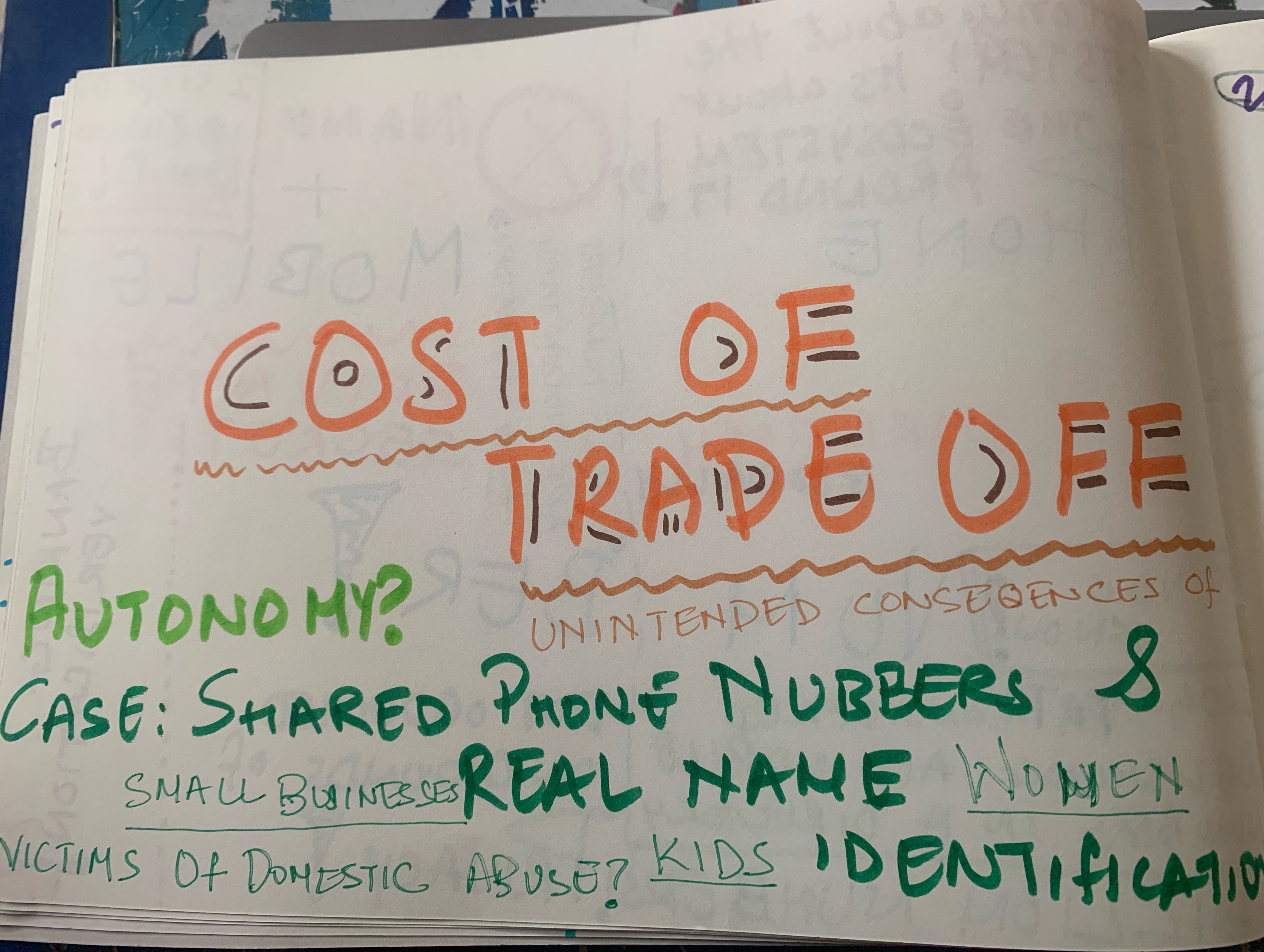

Artefact 19: The unintended costs of the trade off have to be considered. For example, is CNAP, the real name identification system, trading off the autonomy of marginal communities to use numbers that belong to other family members? Consider kids who use their parents’ phone to study in rural areas, small businesses run by women who use a family member’s ID to get access to phones, and victims of domestic abuse who want to keep their identity private for fear of retribution or worse. Their autonomy to run their businesses or study or get peace of mind using whatever means they have is being broken.

Artefact 20: Correlation with harm is a big consideration when any interpretation of the policy is being done and proportionality of harm is being calculated. The standard proportionality test is a legal framework through which it can be calculated.

Proportionality is defined as a 3 pronged framework:

- State AIM or OBJECTIVE: Does it achieve what we want it to achieve? (Aadhar: Creation of a unique identification.)

- SUITABILITY or OTHER OPTIONS: Is it the only way to achieve what we want to achieve? (Aadhar: All the alternates suggested, do they prove uniqueness of identification)

- NECESSITY or CALCULATION of HARM: In achieving what we want to achieve, are we causing disproportionate harm to the rights of an individual?

The downside of the proportionality test is that if any parameter changes, be it objective or necessity, the whole test can fall apart. The government is free to identify the objective, and that changes everything else. But it is still a great metric for privacy jurisprudence. If the court could select the objective instead of the government, it would perhaps select better objectives.

Artefact 21: Reciprocity and consent are deeply related to each other. You download Truecaller because you need to find a name attached to a phone number. Once identity is traded does the reciprocity also determine post verification period of use?

What will happen to the unlawfully collected data before regulation came into place? Reciprocity no longer implies consent after the rules have changed.

Role of Platforms & Service Providers

Artefact 22: In the new Digital India bill the onus of protecting the fundamental right to privacy is shifting to the shoulders of platform owners. Is it empowering users to benefit from their data? Is it burdening the platforms with unclear specifications on what needs to be done to protect it?

FEEDBACK LOOP – AMPLIFICATION AND INSTABILITY

Artefact 23: Anonymity is a spectrum where on one side lies the need for anonymity and on the other the need for verification. Where a service or function falls is deeply dependent on context. Platforms today are doing the job of maintaining this balance, albeit sub-optimally.

The mandate for verification is likely to hit platforms in a negative way & will inhibit new user registrations. Ed-tech providers for instance do not want to verify the children or guardians. It will reduce the propensity of parents to sign up and that affects their bottom line and therefore their ability to provide the services that they do.

Platforms will extend to the metaverse tomorrow where convergence of multiple services is likely to happen. What platforms do today will set the role of metaverse providers tomorrow. And platforms need better guidelines on how to enhance privacy while maintaining the equilibrium they have.

The Ideal World

Artefact 24: There is no standard or universal approach towards privacy, to data and to disclosure. Let each transaction and the parties involved take the decision as to what is the best way for them to verify and secure users and at the same time be able to do business in an optimal way.

Artefact 25: A starting point for understanding the gradation of verification needed for different platforms can be a need-based approach. If the need for verification can be matched to the exact requirement being implemented for a platform, it will help implement the verification requirement proportionally. It can help inform the proportionality debate as well.

The need for each platform should be based on the function of the platform. Depending on that function, the gradation of harm, risk to the user, number of users at scale & knowledge of other alternatives should be included in the decision making on where each platform falls on the spectrum between anonymity and verification.

Artefact 4 (contd.): Kids will always push their boundaries and they need to be able to safely do so to learn about themselves and adapt to the world around them whether this includes accessing porn or counselling.

Verification may decrease the number of possibilities available to children not because of their appropriateness but because of the inability of the platform or service provider to be able to comply.

There need to be various different levels and boundaries at every stage and age that determine what is open to them. Not a single age restriction but different boundaries at each age bracket. What’s more parents can be very biased in their permissions, and can be expected to be unduly restrictive in certain cases and permissive in others.

At the policy stage, automated policing should be mandated, not just at verification but throughout the period of engagement. Benchmarks for each age should be determined, and recourse offered against those services which do not comply with the rules. At the infrastructure level, kids should be recognised using zero knowledge proof tactics, standardised benchmarks should be codified into the infra layer to ensure appropriate moderation & restrictions on content & interactions, and anomalies should be reported to parents or authorities.

AI may be used to identify anomalies and build the benchmarks on an ongoing basis. Just as the dangers in the world are continuously increasing, especially with regards to children and their security, the system should also be able to learn and apply those learnings to setting of benchmarks and policing the internet so that it is safe for them to grow and thrive.

Artefact 1 (contd.): Although a nuanced discussion may go a long way in understanding the issues, a fair share of activism is needed (from platforms and users) to ensure that the fundamental right to privacy remains sacrosanct and is not eroded by harsh implementation of misconstrued government wordings.

The only answer seems to lie in technology. Platforms & tech providers should share their technological advancements with the state and put in an effort to educate the system on the realm of current and state-of-the-art possibilities. AI, homomorphic encryption, data poisoning, zero knowledge proof and other privacy enhancing technology can be used to integrate the guidelines in a better way if they work together. Mandated verification through loosely worded laws is not the only answer.

Artefact 25: To end with, the plurality of modern platforms is what makes a modern democracy. There needs to be plurality in the governance of the platforms, with as many variations as there are platforms. The context of each, proportionality, correlation with harm, reciprocity and consent should determine the level of user data to be validated, stored, shared and protected.

A trustful atmosphere needs to be established with accountability at all levels: the users, the platforms, the regulators and the government.

A one-size-fits-all approach cannot be applied to the scale and breadth of India and its many variations and colours.